Artificial intelligence seems like it can only make our lives easier, right? What could possibly go wrong? Self-driving cars, Siri, Google Now, and the computer that became a Jeopardy! champion all show how the progress of artificial intelligence (AI) has made our lives more convenient, more comfortable, and far more technologically advanced. The possibilities AI offers seem endless: everything we achieve comes from human intelligence, and AI magnifies that intelligence. Once AI is fully developed, its top goals could be ending war, poverty, disease, and any other threat to human survival.

World militaries have already set their sights on advancing AI as far as possible, and some have even considered building autonomous weapons that can eliminate targets accurately and on command. The UN and Human Rights Watch have foreseen the disaster that autonomous weapon systems could cause and have campaigned for a treaty banning them. Once AI begins to advance further, there may be no stopping it; while AI depends on human control now, the open question is whether it can be controlled at all in the future. Yet even with such enormous risks and benefits on the line, very little research is devoted to these issues outside a handful of non-profit institutes such as the Future of Humanity Institute, the Cambridge Centre for the Study of Existential Risk, and the Future of Life Institute.

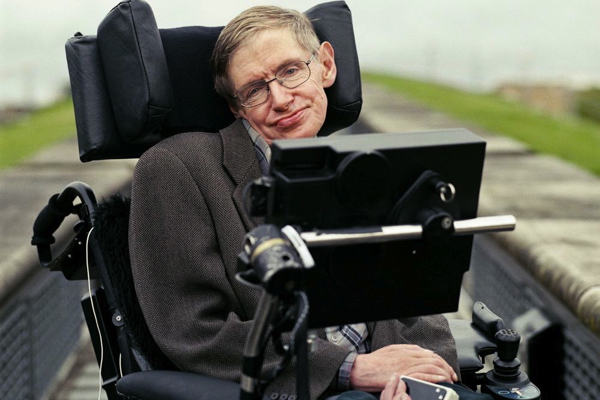

The major concern with AI is that it will eventually outsmart all of mankind. A study from Oxford University estimates that within the next twenty years, computers could take over the jobs of 45% of Americans. Stephen Hawking, with great foresight, urges us all to think before we create: “All of us should ask ourselves what we can do now to improve the chances of reaping the benefits and avoiding the risks.”