By Philip Baillargeon

In the aftermath of the 2016 election and through the impeachment of President Trump (which seems like a lifetime ago but took place shortly after this year began), foreign interference and misinformation campaigns were the main topic of concern ahead of the 2020 election. While coronavirus may take that mantle this year, election misinformation is incredibly harmful to our democratic institutions, and unlike school dances or crowded movie theaters, it hasn’t gone away for the time being. Whether the source is foreign interference from Russia or Iran or domestic misinformation coming from the White House, social media companies have been put in an uncomfortable position: how do you police millions and millions of users to prevent the spread of lies, and how do you label such misinformation when it comes from public figures like the president?

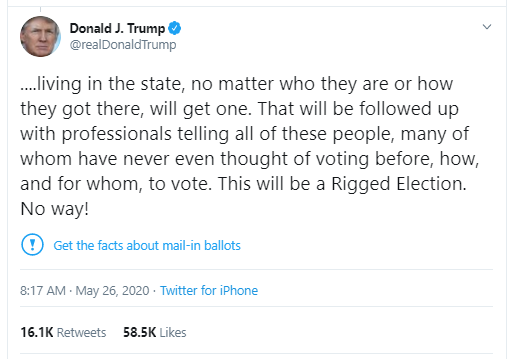

Twitter has been at the forefront of this operation in an attempt to save face after the cesspool that was the 2016 election. If you’re a frequent user of the site, you may have seen occasional banners that cover up or flag misleading content, whether it concerns the coronavirus or the upcoming election. These notices link to reputable news sources that provide fact-checked information about hot-button issues like mail-in voting. Furthermore, these flagged tweets cannot be retweeted without comment, replied to, or recommended to users by Twitter’s algorithm, effectively isolating each tweet in its timeline, dead on arrival. However, there’s a reason that companies have been reluctant to flag or censor messages: it’s a slippery slope. Twitter has had to make difficult decisions about flagging tweets from public figures, blocking calls for violence after the election, and handling premature declarations of victory before all votes are counted. What it blocks and what it doesn’t is a decision that must be made quickly and could have harmful consequences for the company.

Facebook cites voter intimidation, rather than pure misinformation, as its reason for beginning to flag posts before the 2020 election. This makes sense if you have been following Facebook’s stance on misinformation: Mark Zuckerberg, chief executive of Facebook, has made repeated public statements defending the platform’s allowance of Holocaust denial and anti-vaccination ads, which the company has only recently started to remove. Facebook has also gone about policing politicians differently: after polls close on November 3rd, there will be no political ads on Facebook indefinitely. Instead of focusing on the lead-up to the election (for which the window to take substantial action against misinformation has rapidly closed), Facebook has resolved to protect the integrity of election results and prevent candidates from declaring victory prematurely. Facebook has always been less proactive than Twitter, so this strategy makes total sense coming from the platform. While the company has acknowledged the damage done through its platform in 2016, its capacity to handle misinformation is roughly the same as it was then, whether because of an attempt to appease political figures, an unwillingness to make substantial change, or a desire to keep scandalous, viral content that generates ad revenue on the platform.

If you are a voter, or live in a household with voters, it is important to understand that, given the size and scope of these platforms, no measure can flag or contextualize all of the misinformation on them. Please make sure to cross-reference claims made on social media with reputable publications, and encourage friends and family to do the same. While there may be a sea of misinformation out there, quality information is never too far away.